Page Rank Algorithm

I generally love reading famous algorithms and the work done by others as they set the platform for the new ideas. I was recently reading about the page rank algorithm which is the base for the creation of a huge giant like Google. Though it is a very old work and most of the junta is already aware of it, I would still like to go through it once again as it certainly gives a good feeling 😊.

What is Page Rank Algorithm?

It is a link analysis algorithm which ranks the various pages on the web according to their importance. It was invented by two stanford students, Larry Page and Sergey Brin in 1998. It is defined in a manner such that a page gets importance whenever an important page links to it. So, imagine the web as a network of nodes connected with each other, where the nodes are the pages and the edges are the links. The value of a node is propagated to its outlink by a mathematical formula. Let us discuss the algorithms step by step:

Initial Rank

Let us say the graph consists of N nodes. The graph can have multiple disconnected components too but we will discuss more about that later. Initial rank for each of the node will be 1/N.

Rank Updation

Rank of each of the page is updated by the following rule:

for nodes in inlinks:

rank = rank + (rank_of_nodes/num_of_outlinks_of_nodes)

We are iterating through all the inlinks to the page and then adding a part of the rank of its parent page. The part is nothing but a the rank of its parent page divided by the total number of outlinks of the parent page.

Disconnected Components and Dangling Nodes

Imagine having a node which does not have any outlinks, the importance of that page would not be able to propagate further. Similarly for disconnected components the rank propagation will not work. To handle this, a damping factor d is introduced. This damping factor is nothing but the probability of making a random walk if the surfer gets stuck somewhere. Since every node is equally probable, the contribution in rank from a random jump is 1/N.

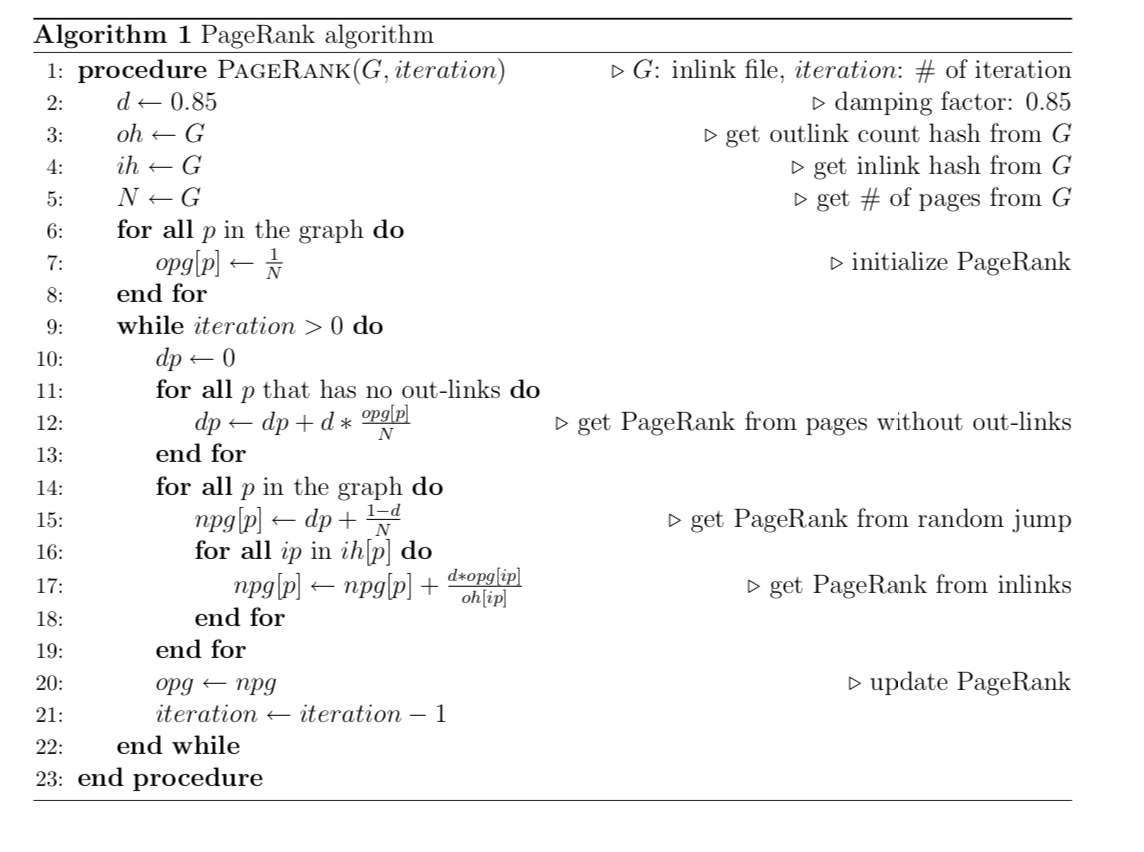

I will now be going through the pseudo code(given below) step by step and explaining the complete algorithm.

G : denotes the web as a graph

iteration : number of iterations for which we will be training the algorithm

d : the damping factor

N : total number of nodes or pages

oh : a hash table containing list of outlinks for each page

ih : a hash table containing list of inlinks for each page

First loop of the code initialises the rank of each node with the value 1/N.

Now for each iteration, we do the following:

we calculate the total rank contribution by the sink nodes which is denoted by dp. Then we update the rank value for each node as follows:

First updation will be taking the effect of random walk in consideration and then the usual updation rule described above applies. The ranks are updated till they converge and the surfer finally shows the pages according to the rank values in decreasing order.

Let us implement the algorithm for the graph shown below:

N = 6

d = 0.3

iterations = 50

oh = {0:[1],1:[2], 2:[0,1], 3:[2], 4:[5], 5:[4]}

ih = {0:[2],1:[0,2], 2:[1,3], 3:[], 4:[5], 5:[4]}

opg = [float(1/N) for i in range(N)]

The initial rank vector look like this:

[0.16666666666666666,0.16666666666666666,

0.16666666666666666,0.16666666666666666,

0.16666666666666666,0.16666666666666666]

Updation Part

for it in range(iterations):

dp = 0

for p in range(N):

if(len(oh[p])==0):

dp += (1-d)/N

npg = [0 for p in range(N)]

for p in range(N):

npg[p] = dp + (1-d)/N

for ilink in ih[p]:

npg[p] += ((d*opg[ilink]))/len(oh[ilink])

opg = npg

The updated rank vector after 50 iterations look like this:

[0.14807930607187111,0.19250309789343245,

0.2094175960346964,0.11666666666666665,

0.16666666666666666,0.16666666666666666]

That’s it, we are done for now 😊.

Keep Learning, Keep Sharing